In a major strategic shift, OpenAI announced Monday that it will no longer pursue a full for-profit transformation and will instead maintain its original nonprofit governance structure. The decision, which follows months of internal and external pressure, reaffirms the organization’s commitment to building artificial general intelligence (AGI) for the benefit of humanity — not just shareholders.

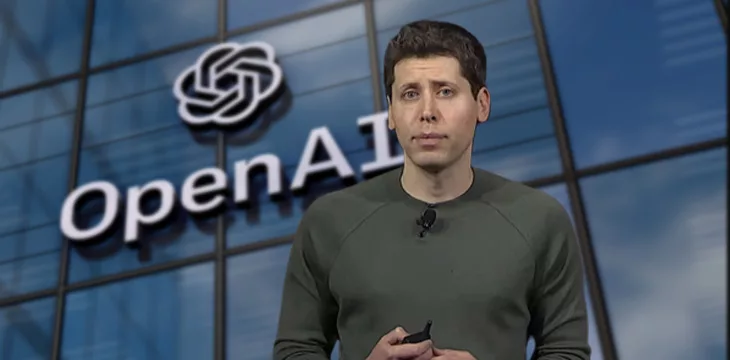

The announcement came in a letter from CEO Sam Altman, who cited conversations with civic leaders and discussions with the Attorneys General of California and Delaware as key factors behind the change. “We made the decision for the nonprofit to stay in control,” Altman wrote, emphasizing a renewed focus on public interest and ethical stewardship of AI technologies like ChatGPT.

OpenAI was originally founded in 2015 as a nonprofit with the ambitious goal of ensuring that AGI — artificial intelligence that can outperform humans across a broad range of tasks — would be developed safely and equitably. Over time, however, the organization layered on a “capped-profit” arm to attract commercial investment and scale operations. That for-profit entity will now be restructured into a public benefit corporation (PBC) — a legally recognized business type that must weigh public impact alongside financial returns.

Bret Taylor, chair of OpenAI’s nonprofit board, clarified that this new structure aims to balance mission and market. “The public benefit corporation model ensures we can grow while staying true to our founding purpose,” he said.

The move comes as OpenAI faces intensifying legal, political, and ethical scrutiny. One major flashpoint is an ongoing lawsuit filed by co-founder Elon Musk, who accused the company and Altman of straying from its original principles. While a federal judge recently dismissed several of Musk’s claims, parts of the case will proceed to trial next year. The lawsuit has amplified a broader debate over whether cutting-edge AI development should be governed by public-interest frameworks or private market incentives.

In addition to legal pressure, OpenAI has come under scrutiny from the Attorneys General of California and Delaware, the states where the company is headquartered and incorporated, respectively. Advocacy groups and former employees had petitioned both states’ top law enforcement officials to intervene, arguing that OpenAI’s planned restructuring risked undermining its charitable mission.

Critics feared that OpenAI, as it pursued superhuman AI, could shift its focus toward profit maximization at the expense of public safety. These concerns, coupled with growing public reliance on ChatGPT (which now has more than 400 million weekly users), helped fuel a backlash against the proposed governance changes.

Ultimately, the reversal signals that OpenAI is responding to its critics. By recommitting to nonprofit oversight, the company aims to rebuild trust and reinforce its identity as a mission-driven organization, even as it operates at the forefront of one of the world’s most powerful technological revolutions.

Whether this hybrid model can withstand the pressures of a $300 billion valuation and commercial demand remains to be seen. But for now, OpenAI has chosen public accountability over private control — a move that may shape the future of AI governance for years to come.