Harnessing the Brain’s Immune Cells to Stave off Alzheimer’s and Other Neurodegenerative Diseases

Many neurodegenerative diseases, or conditions that result from the loss of function or death of brain cells, remain largely untreatable. Most available treatments target just one of the multiple processes that can lead to neurodegeneration, so they may only partially address disease symptoms or progression, if they help at all.

But what if researchers harnessed the brain’s inherent capabilities to cleanse and heal itself? My colleagues and I in the Lukens Lab at the University of Virginia believe that the brain’s own immune system may hold the key to neurodegenerative disease treatment. In our research, we found a protein that could possibly be leveraged to help the brain’s immune cells, or microglia, stave off Alzheimer’s disease.

This article was republished with permission from The Conversation, a news site dedicated to sharing ideas from academic experts. It represents the research-based findings and thoughts of Kristine Zengeler, Ph.D. Candidate in Neuroscience, University of Virginia.

Challenges in Treating Neurodegeneration

No available treatments for neurodegenerative diseases stop ongoing neurodegeneration while also helping affected areas in the body heal and recuperate.

In terms of failed treatments, Alzheimer’s disease is perhaps the most infamous of neurodegenerative diseases. Affecting more than 1 in 9 U.S. adults 65 and older, Alzheimer’s results from brain atrophy with the death of neurons and loss of the connections between them. These casualties contribute to memory and cognitive decline. Billions of dollars have been funneled into researching treatments for Alzheimer’s, but nearly every drug tested to date has failed in clinical trials.

Another common neurodegenerative disease in need of improved treatment options is multiple sclerosis. This autoimmune condition is caused by immune cells attacking the protective cover on neurons, known as myelin. Degrading myelin leads to communication difficulties between neurons and their connections with the rest of the body. Current treatments suppress the immune system and can have potentially debilitating side effects. Many of these treatment options fail to address the toxic effects of the myelin debris that accumulates in the nervous system, which can kill cells.

A New Frontier in Treating Neurodegeneration

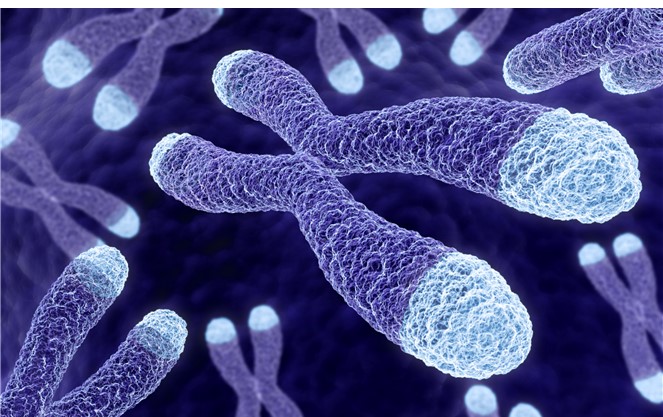

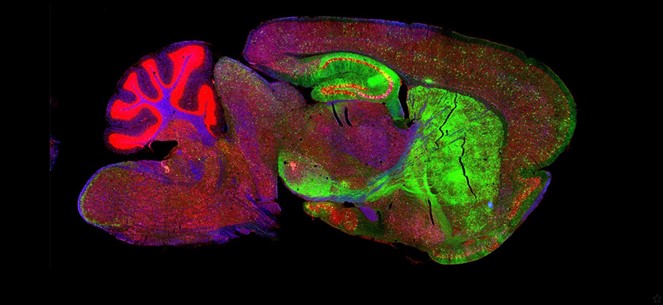

Microglia are immune cells masquerading as brain cells. In mice, microglia originate in the yolk sac of an embryo and then infiltrate the brain early in development. The origins and migration of microglia in people are still under study.

Microglia play important roles in healthy brain function. Like other immune cells, microglia respond rapidly to pathogens and damage. They help to clear injuries and mend afflicted tissue, and can also take an active role in fighting pathogens. Microglia can also regulate brain inflammation, a normal part of the immune response that can cause swelling and damage if left unchecked.

Microglia also support the health of other brain cells. For instance, they can release molecules that promote resilience, such as the protein BDNF, which is known to be beneficial for neuron survival and function.

But the keystone feature of microglia is their astounding janitorial skills. Of all brain cell types, microglia possess an exquisite ability to clean up gunk in the brain, including the damaged myelin in multiple sclerosis, pieces of dead cells and amyloid beta, a toxic protein that is a hallmark of Alzheimer’s. They accomplish this by consuming and breaking down debris in their environment, effectively eating up the garbage surrounding them and their neighboring cells.

Given the many essential roles microglia serve to maintain brain function, these cells may possess the capacity to address multiple arms of neurodegeneration-related dysfunction. Moreover, as lifelong residents of the brain, microglia are already educated in the best practices of brain protection. These factors put microglia in the perfect position for researchers to leverage their inherent abilities to protect against neurodegeneration.

New data in both animal models and human patients points to a previously underappreciated role microglia also play in the development of neurodegenerative disease. Many genetic risk factors for diseases like Alzheimer’s and multiple sclerosis are strongly linked to abnormal microglia function. These findings support an accumulating number of animal studies suggesting that disruptions to microglial function may contribute to neurologic disease onset and severity.

This raises the next logical question: How can researchers harness microglia to protect the nervous system against neurodegeneration?

Engaging the Magic of Microglia

In our lab’s recent study, we keyed in on a crucial protein called SYK that microglia use to manipulate their response to neurodegeneration.

Our collaborators found that microglia dial up the activity of SYK when they encounter debris in their environment, such as amyloid beta in Alzheimer’s or myelin debris in multiple sclerosis. When we inhibited SYK function in microglia, we found that twice as much amyloid beta accumulated in Alzheimer’s mouse models and six times as much myelin debris in multiple sclerosis mouse models.

Blocking SYK function in the microglia of Alzheimer’s mouse models also worsened neuronal health, as indicated by increased levels of toxic neuronal proteins and a surge in the number of dying neurons. This correlated with hastened cognitive decline, as the mice failed to learn a spatial memory test. Similarly, impairing SYK in multiple sclerosis mouse models exacerbated motor dysfunction and hindered myelin repair. These findings indicate that microglia use SYK to protect the brain from neurodegeneration.

But how does SYK protect the nervous system against damage and degeneration? We found that microglia use SYK to migrate toward debris in the brain. SYK also helps microglia remove and destroy this debris by stimulating other proteins involved in cleanup processes. These jobs support the idea that SYK helps microglia protect the brain by equipping them to remove toxic materials.

Finally, we wanted to figure out if we could leverage SYK to create “super microglia” that could help clean up debris before it makes neurodegeneration worse. When we gave mice a drug that boosted SYK function, we found that Alzheimer’s mouse models had lower levels of plaque accumulation in their brains one week after receiving the drug. This finding points to the potential of increasing microglia activity to treat Alzheimer’s disease.

The Horizon of Microglia Treatments

Future studies will be necessary to see whether creating a super microglia cleanup crew to treat neurodegenerative diseases is beneficial in people. But our results suggest that microglia already play a key role in preventing neurodegenerative diseases by helping to remove toxic waste in the nervous system and promoting the healing of damaged areas.

It’s possible to have too much of a good thing, though. Excessive inflammation driven by microglia could make neurologic disease worse. We believe that equipping microglia with the proper instructions to carry out their beneficial functions without causing further damage could one day help treat and prevent neurodegenerative disease.